Docker

I had to recreate my lost notes about Docker, so this entry collects them. The notes come from two courses: one from Udemy (which I didn't finish) and one on Platzi. Here is the GitHub repo the Platzi Docker course author provided.

# helper shell variables
WORKING_DIR=$(pwd)
NOTES_EXEC_FOLDER="DockerOut"
image_name=postgres
volume_name=myvolume
path_in_container=/var/lib/postgresql/data
path_in_host=$WORKING_DIR/$NOTES_EXEC_FOLDER
custom_image_name=myimage
mkdir -p "$NOTES_EXEC_FOLDER"

# BEGIN COMMAND EXAMPLES

# --list running containers
docker ps

# --list all containers (even if they are stopped)
docker ps -a

# --pull an image from the central hub repository
docker pull $image_name

# --run an image
# in this case postgres is the <image_name>
# ###note: the official postgres image refuses to start unless POSTGRES_PASSWORD
# (or POSTGRES_HOST_AUTH_METHOD) is set, e.g. docker run -e POSTGRES_PASSWORD=<password> $image_name
docker run $image_name

# --run an image binding ports
# where 3000 is the port of the container and 3002 is the port of the host
# this will create a container with a unique id
docker run -p 3002:3000 $image_name

# --run an image with a container name
# this way we can refer to the container not only by its id
# but also by the specified name
docker run --name mydatabase postgres

# --Volumes
# creating a volume
docker volume create $volume_name

# if we want to persist data from the container in our host
# we can use two approaches
# 1. -v with a host path, for bind mounts
# 2. --mount with a volume, to let docker manage the storage (a bit more secure)

# bind-mounts approach example
# this will reflect all changes in $path_in_container over $path_in_host
docker run -v $path_in_host:$path_in_container $image_name

# volumes approach example
# ###note: this uses the volume created above
docker run --name db --mount src=$volume_name,dst=$path_in_container $image_name

# --copying files or dirs between host and container
touch prueba.txt # create file in the host working directory
docker run -d --name copytest postgres tail -f /dev/null # run a postgres container; the tail keeps it running until it is stopped
docker exec -it copytest bash # enter the container
mkdir testing # create directory in container (run this inside the container, then exit)
docker cp prueba.txt copytest:/testing/test.txt # copy host file into the container
docker cp copytest:/testing localtesting # copy directory inside the container to my host
# ###note: with "docker cp" we don't need the container to be running.

# --creating applications
# build a custom image
# ###note: for this you will need to have a Dockerfile in the working directory
docker build -t $custom_image_name .

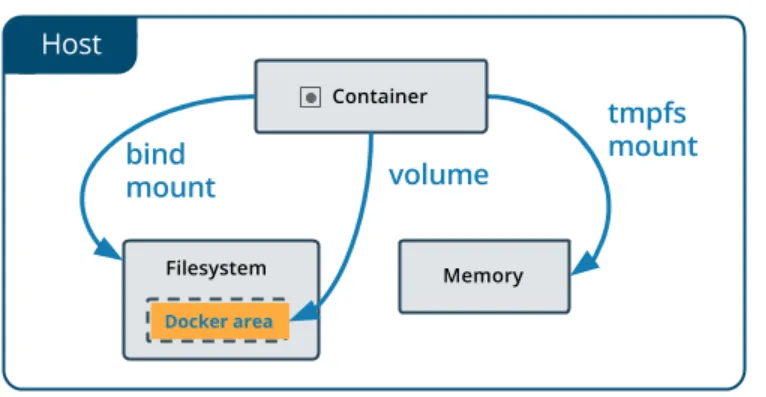

Volumes

- Host: the machine where Docker is installed.

- Bind mount: stores the files at a path on the host machine, persistently. Less secure, since the container reads and writes that host directory directly.

- Volume: stores the files in an area managed by Docker, where Docker handles the saved data (more secure).

- tmpfs mount: stores the data temporarily, in memory. The data is deleted when the container stops running.
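As a rough sketch of the three storage options above, the helper below builds the matching --mount argument for each one. The function name run_with_mount, the /data target path, the myvolume volume name, and the ubuntu image are assumptions made for illustration; they are not from the courses.

```shell
# Hypothetical helper: start a throwaway container using one of the three mount types.
# Usage: run_with_mount bind|volume|tmpfs <container_name>
run_with_mount() {
  kind=$1
  name=$2
  target=/data   # path inside the container (assumed for this sketch)
  case "$kind" in
    bind)   mount="type=bind,src=$PWD,dst=$target" ;;        # files live in a host directory
    volume) mount="type=volume,src=myvolume,dst=$target" ;;  # files live in a Docker-managed volume
    tmpfs)  mount="type=tmpfs,dst=$target" ;;                # files live in memory, gone on stop
    *)      echo "unknown mount type: $kind" >&2; return 1 ;;
  esac
  # tail -f /dev/null only keeps the container alive so it can be inspected
  docker run -d --name "$name" --mount "$mount" ubuntu tail -f /dev/null
}

# Example calls (they need a running Docker daemon):
#   run_with_mount volume voltest
#   run_with_mount tmpfs tmptest
```

Running docker inspect on one of these containers afterwards shows the chosen type under its Mounts section.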

Developing applications

# this assumes you have an existing Dockerfile
# in the current working directory
docker build -t <image_name> .

Below is a very basic example of a Dockerfile for a Flask application based on an Ubuntu image.

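# ubuntu:22.04 is pinned here as an assumption; newer releases block system-wide pip installs
FROM ubuntu:22.04

RUN apt-get update && apt-get -y install python3 python3-pip

RUN pip3 install flask flask-mysql

COPY . /opt/source-code

# --host=0.0.0.0 makes the app reachable from outside the container
ENTRYPOINT FLASK_APP=/opt/source-code/app.py flask run --host=0.0.0.0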

Networking

docker network ls

The networks listed by the command above are the pre-made ones.

- bridge: the default network that containers join when no other network is specified. The default bridge is kept mostly for backward compatibility.

- host: this one interfaces with the host network. It is a representation of the real network of my machine.

- none: we use none when we want the container to have no inbound or outbound network access at all.

Create a network that other containers can attach to.

docker network create --attachable <network_name>

Inspect a network.

docker network inspect <network_name>

Connect a container to a network.

docker network connect <network_name> <container_name>

When we connect a container to a custom network, we can reference other containers on that network by container name. In the example command below, the MONGO_URL environment variable points to db instead of localhost; db is assumed to be a running container that holds a Mongo database.

docker run -d --name app -p 3000:3000 --env MONGO_URL=mongodb://db:27017/test <image_name>
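To put the networking steps together, here is a minimal sketch that creates a network and starts both containers already attached to it with the --network flag of docker run, instead of connecting them afterwards. The function name start_app_with_db and its parameters are hypothetical, and the app image is whatever image you built for your application.

```shell
# Hypothetical helper: create a network and start db + app attached to it.
# Usage: start_app_with_db <network_name> <app_image>
start_app_with_db() {
  network=$1
  app_image=$2
  docker network create "$network"
  # The container name "db" is what the app uses as hostname in MONGO_URL
  docker run -d --name db --network "$network" mongo
  docker run -d --name app --network "$network" -p 3000:3000 \
    --env MONGO_URL=mongodb://db:27017/test "$app_image"
}

# Example call (needs a running Docker daemon):
#   start_app_with_db mynet myapp
```

Because both containers share the user-defined network, the name db resolves from inside app without any extra configuration.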

Docker compose

Creating each image and container by hand, then creating the network and connecting the desired containers to it, is tedious. For this, Docker has a tool called Docker Compose, which manages containers and the setup between them.

Alongside any Dockerfiles, Docker Compose uses a file called docker-compose.yml, in which we declare all the services our application uses.

Below you can see an example of a docker compose file.

version: "3.8"

services:
  app:
    image: myapp # if we would like to build an image on the fly use: build: .
    # where the dot after "build:" is the current directory
    environment:
      MONGO_URL: "mongodb://db:27017/test"
    depends_on:
      - db
    ports:
      - "3000:3000"
    volumes:
      - .:/usr/src
      - /usr/src/node_modules # this excludes the node_modules folder so it is not mounted from the host
    # command: npx nodemon index.js # with this we can override the CMD declared in the Dockerfile of the service we are trying to build/run

  db:
    image: mongo

Then, to run the Docker Compose application:

docker-compose up # to run in detached mode just add the -d flag
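Starting the services does not mean they are ready to accept connections. As a hedged sketch, the helper below polls the health status that docker inspect reports for a container. It assumes the container's image or service definition declares a healthcheck (the stock mongo image may not), and the name wait_for_healthy and its default of 30 attempts are made up for this example.

```shell
# Hypothetical helper: wait until a container reports "healthy".
# Usage: wait_for_healthy <container_name> [max_attempts]
wait_for_healthy() {
  container=$1
  attempts=${2:-30}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Health.Status is only present when a healthcheck is defined (assumption)
    status=$(docker inspect --format '{{.State.Health.Status}}' "$container" 2>/dev/null)
    if [ "$status" = "healthy" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1   # never became healthy within the allowed attempts
}

# Example call (needs a running Docker daemon and an existing container):
#   wait_for_healthy <container_name> && echo "ready"
```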

We can also use the ps command under docker compose and other utilities like showing the logs.

# list all processes
docker-compose ps
# show logs
docker-compose logs
# show logs of a specific service
docker-compose logs <service_name>
# execute a command in a service's container
docker-compose exec <service_name> <command>
# stop and remove the containers and networks created by docker-compose up
docker-compose down
# build the services defined in the docker-compose.yml file
docker-compose build
# or build a specific service
docker-compose build <service_name>

When we collaborate with other team members on a project, we can use a docker-compose.override.yml file to override parameters defined in the base docker-compose.yml file.

version: "3.8"

services:
  app:
    build: .
    environment:
      ONE_VARIABLE: "some_value"

Some random notes:

# some random commands
docker container prune # delete all stopped containers
docker rm -f $(docker ps -aq) # force-delete all containers, including running ones
docker network ls # list networks
docker volume ls # list volumes
docker image ls # list images
docker system prune # delete everything that is not being used (volumes are kept unless --volumes is passed)
docker run -d --name app --memory 1g platziapp # limit memory
docker stats # see how many resources docker uses on the host machine
docker inspect app # see details about a container, e.g. whether it died due to lack of memory
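The full docker inspect output is long, so a format template helps when you only want to know why a container stopped. This is a small sketch; the function name container_exit_info is hypothetical, while the ExitCode and OOMKilled fields come from the State section of the inspect output.

```shell
# Hypothetical helper: print the exit code and whether the kernel killed
# the container for exceeding its memory limit.
# Usage: container_exit_info <container_name>
container_exit_info() {
  docker inspect \
    --format 'exit_code={{.State.ExitCode}} oom_killed={{.State.OOMKilled}}' \
    "$1"
}

# Example call (needs a running Docker daemon and an existing container named app):
#   container_exit_info app
```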

Note: an exit code greater than 128 means the main process was terminated by a signal; the code is 128 plus the signal number (for example, 137 for SIGKILL and 143 for SIGTERM).

Note: depends_on on its own only controls the start order; it does not wait for the dependency to be ready. For that we have to use a healthcheck. For more details look at this.

Some commands to stop and/or kill a container:

docker stop <container> # sends a SIGTERM signal to the main process of the container (and SIGKILL if it has not exited after the grace period)
docker kill <container> # sends a SIGKILL signal to the main process
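The signals sent by docker stop and docker kill show up in the exit code as 128 plus the signal number. The sketch below reproduces that without Docker, using a child shell that signals itself; the variable names are only for illustration.

```shell
# A process terminated by signal N is reported with exit code 128 + N.
sh -c 'kill -TERM $$'   # the child shell sends SIGTERM (15) to itself
term_code=$?            # 143 = 128 + 15
sh -c 'kill -KILL $$'   # same with SIGKILL (9)
kill_code=$?            # 137 = 128 + 9
echo "SIGTERM -> $term_code, SIGKILL -> $kill_code"
```

A container that exits with 137 was therefore killed with SIGKILL, which is also what happens when it runs out of memory.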

If you want to see the processes of a container:

docker exec <container> ps -ef

There are two forms to define the execution of a container inside the Dockerfile.

- shell form

- exec form

Shell form:

FROM ubuntu:latest
COPY ["loop.sh", "/"]
CMD /loop.sh

Exec form:

FROM ubuntu:latest
COPY ["loop.sh", "/"]
CMD ["/loop.sh"]

As the CMD lines of the two Dockerfiles show, the difference is minimal. In shell form the main process is a shell (/bin/sh -c), and the program that is meant to be the main one runs as its child. In exec form the main process is the program itself, not a child of a shell. Note: the exec form is recommended, because the program then receives the stop signal directly and the container can shut down gracefully.
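Receiving the signal is only half of a graceful shutdown; the program also has to handle it. The sketch below is a hypothetical stand-in for loop.sh (the real script is not shown in these notes) that traps SIGTERM and exits cleanly. It runs without Docker: the loop is started in the background and then sent the same signal docker stop would send.

```shell
# Hypothetical stand-in for loop.sh: loops forever but exits cleanly on SIGTERM.
loop() {
  trap 'echo "SIGTERM received, cleaning up"; exit 0' TERM
  while true; do
    sleep 1 &   # sleep in the background so the trap can run right away
    wait $!
  done
}

loop &                   # run the loop as a background process
loop_pid=$!
sleep 2
kill -TERM "$loop_pid"   # the signal "docker stop" sends to the main process
wait "$loop_pid"
loop_status=$?
echo "loop exited with status $loop_status"
```

With the shell form, this handler would sit in a child of /bin/sh -c and would typically never see the signal, so Docker would fall back to SIGKILL after the grace period.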

For more details on Docker compose look at this.